Description

Versatile AI system based on the world's most powerful GPU

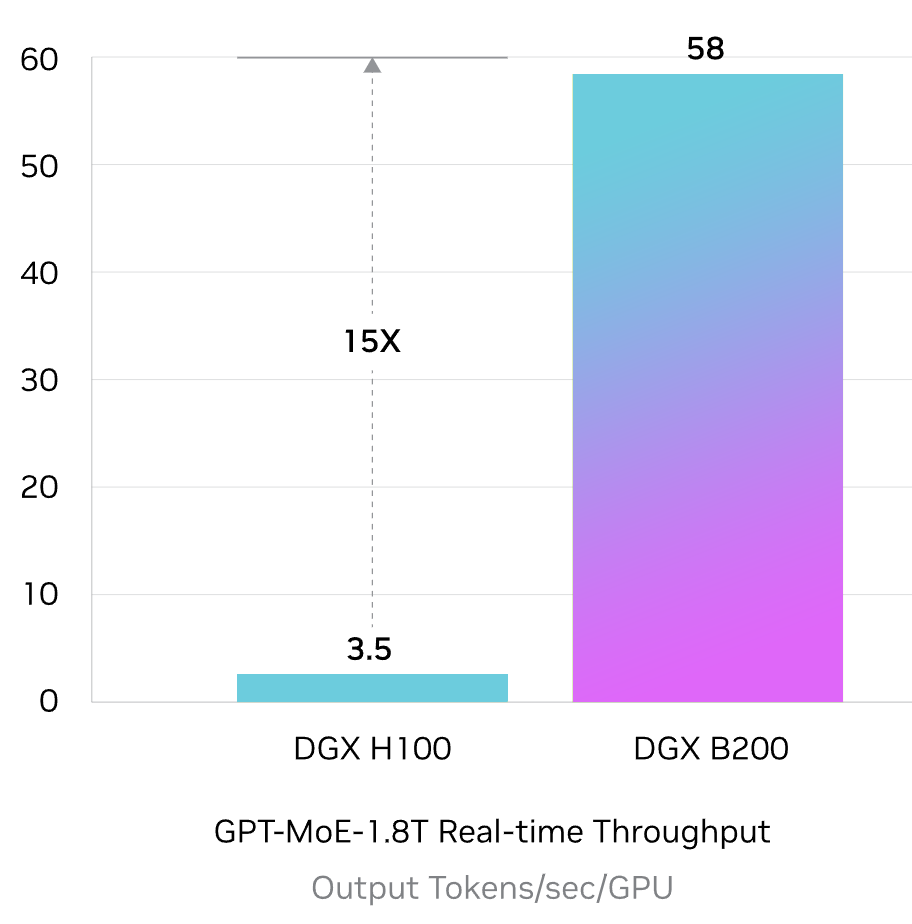

NVIDIA DGX B200 is built on NVIDIA's newest and most advanced technology, delivering up to 3x the training performance and up to 15x the inference performance of the previous generation.

NVIDIA DGX™ B200 is a unified AI platform for develop-to-deploy pipelines for businesses of any size at any stage in their AI journey. Leveraging the NVIDIA Blackwell GPU architecture, DGX B200 can handle diverse workloads, making it ideal for businesses looking to accelerate their AI transformation.

Computing on the most powerful NVIDIA GPUs

Unified NVIDIA Ecosystem

One Platform for full-stack development pipelines

Powerhouse of AI Performance

Proven Infrastructure Standard

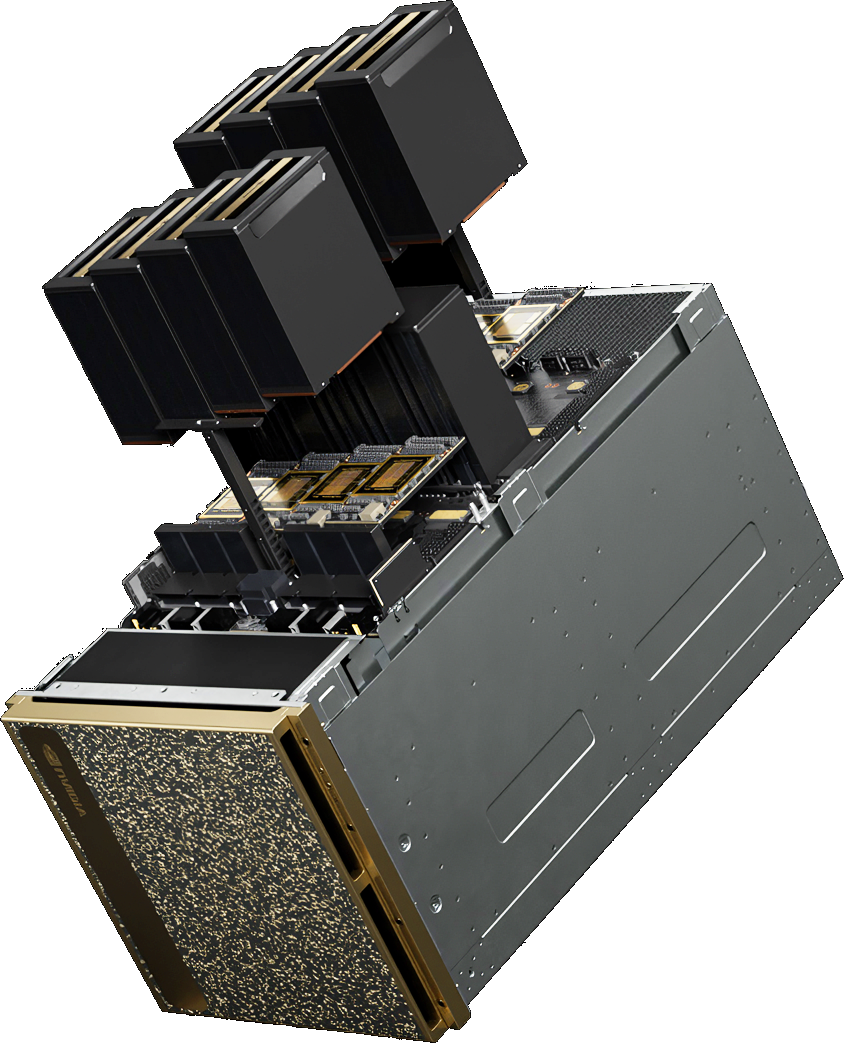

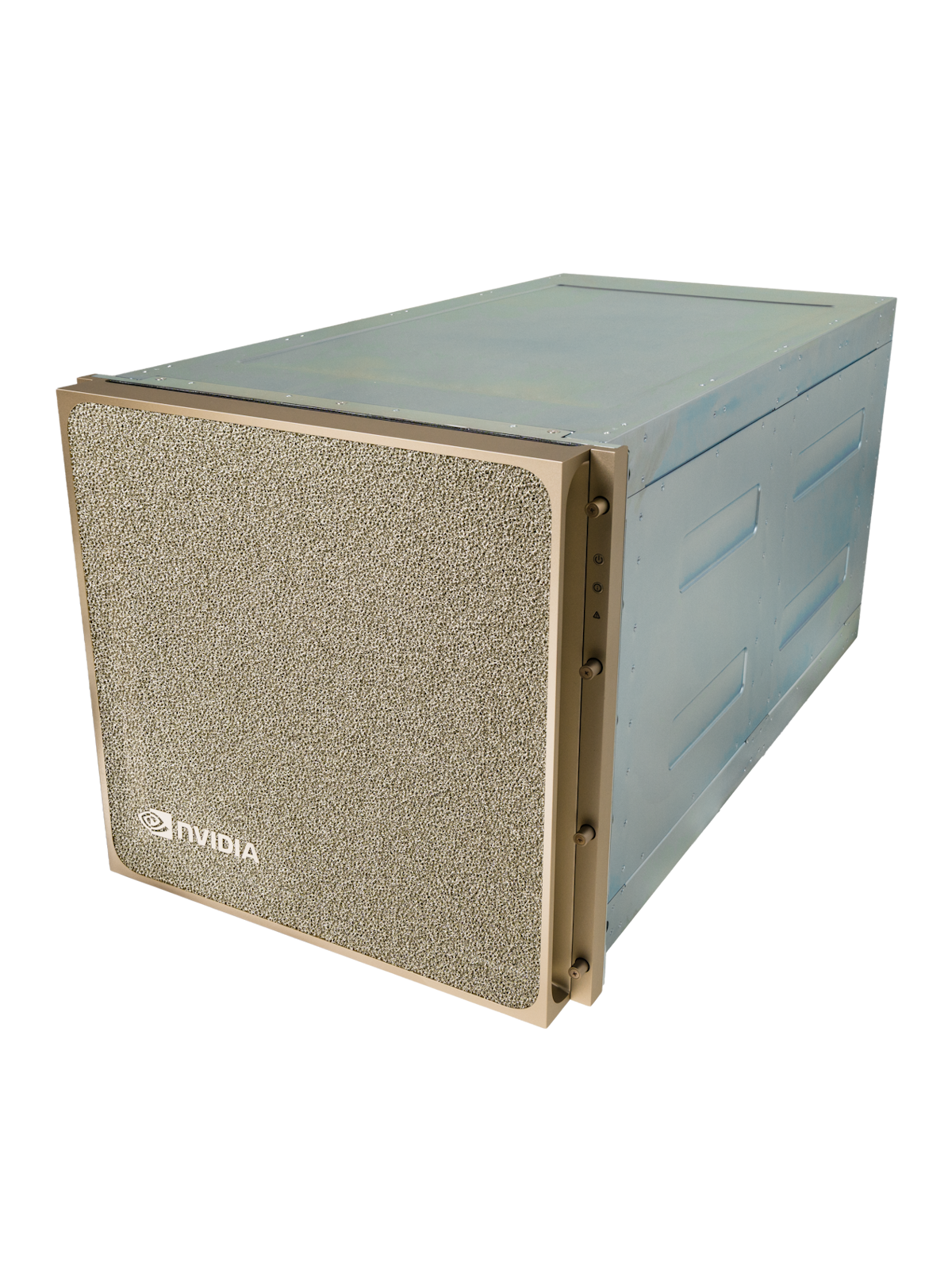

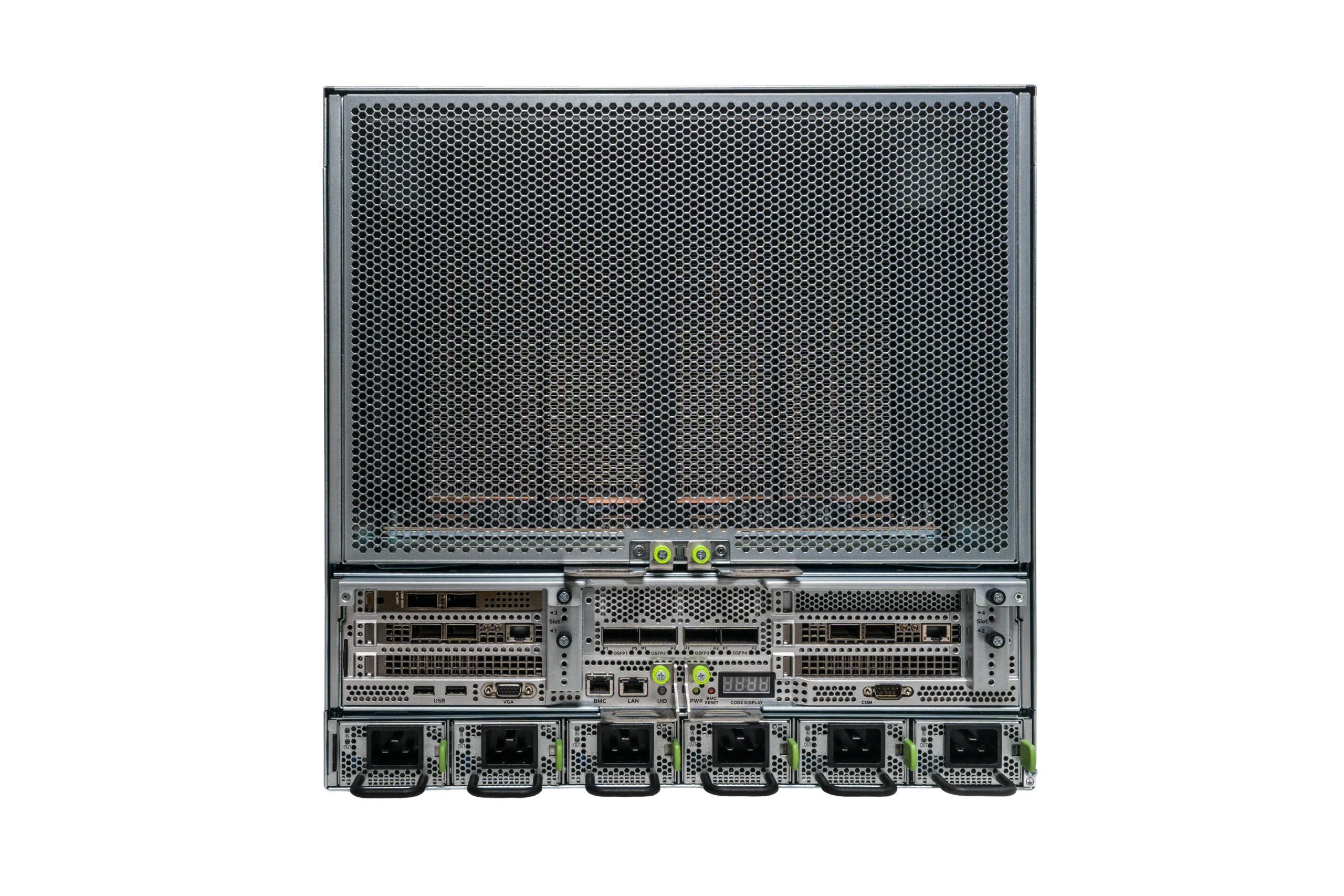

NVIDIA DGX B200

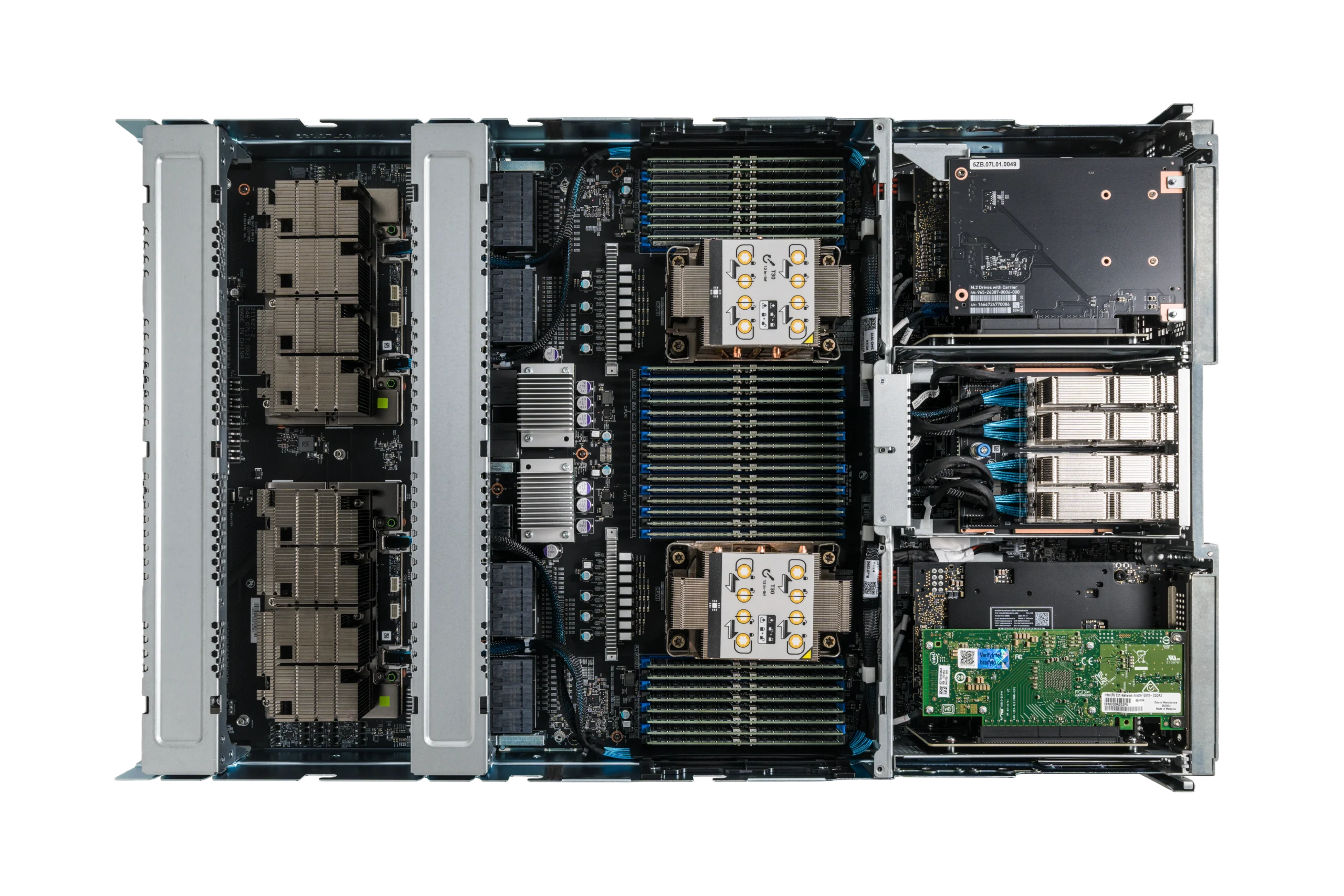

NVIDIA B200 GPU

8x NVIDIA B200 GPUs with 1440 GB total HBM3e GPU memory

NVLink & NVSwitch

6x NVIDIA NVSwitches with 4.8TB/s of bidirectional bandwidth

30TB Gen4 NVMe SSD

8× 3.84 TB NVMe SSDs with 50GB/s of peak bandwidth (2x faster than Gen3 NVMe SSDs), plus 2× 1.92 TB NVMe SSDs for the OS

Dual 56-core Intel CPUs

A total of 112 processor cores and up to 4TB of system memory

High-speed fabric

8x NVIDIA ConnectX-7 VPI with up to 400Gb/s InfiniBand / Ethernet

Optimized Software Stack

DGX OS, all necessary system software, GPU-accelerated applications and pre-trained models
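
The headline figures in the list above can be cross-checked with simple arithmetic. The sketch below only uses numbers quoted in the list; the derived values (memory per GPU, raw storage totals, core count) are computed from them and are not separately published here. The constant and function names are illustrative.

```python
# Cross-check of the figures in the specification list.
# Inputs are copied from the list; outputs are plain arithmetic on them.

GPU_COUNT = 8
TOTAL_GPU_MEMORY_GB = 1440

DATA_DRIVE_COUNT = 8
DATA_DRIVE_TB = 3.84
OS_DRIVE_COUNT = 2
OS_DRIVE_TB = 1.92

CPU_COUNT = 2
CORES_PER_CPU = 56


def derived_figures() -> dict:
    """Return values implied by the listed specifications."""
    return {
        "gpu_memory_per_gpu_gb": TOTAL_GPU_MEMORY_GB / GPU_COUNT,
        "data_storage_tb": DATA_DRIVE_COUNT * DATA_DRIVE_TB,  # raw capacity
        "os_storage_tb": OS_DRIVE_COUNT * OS_DRIVE_TB,        # raw capacity
        "total_cpu_cores": CPU_COUNT * CORES_PER_CPU,
    }


if __name__ == "__main__":
    for name, value in derived_figures().items():
        print(f"{name}: {value:g}")
```

The data drives add up to 30.72 TB of raw capacity, which the list rounds to 30TB. Usable capacity depends on the RAID and filesystem layout, which the list does not specify.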

Performance overview and cross-generation comparison

Real Time Large Language Model Inference

Supercharged AI Training Performance

Accelerated Data Processing

Fastest Time to Solution

DGX B200 integrates a tested and optimized DGX software stack, including an AI-tuned base operating system, all necessary system software, GPU-accelerated applications, pre-trained models, and more from NGC.

NVIDIA Enterprise Services provide support, education, and professional services for your DGX infrastructure. With NVIDIA experts available at every step of your AI journey, Enterprise Services can help you get your projects up and running quickly and successfully.

NVIDIA DGX Systems Provide

Full AI Software Stack

NVIDIA DGX server

The universal system for all AI workloads – from analytics to training to inference. DGX B200 sets a new bar for compute density, packing 72 petaFLOPS of AI training performance into a 10U form factor, replacing legacy compute infrastructure with a single, unified system.
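
As a rough illustration of the compute-density claim, the sketch below divides the quoted 72 petaFLOPS by the 10U form factor and by the eight GPUs in the system. The page does not state the numeric precision behind the 72 petaFLOPS figure, so the results are only as meaningful as that headline number; the names used are illustrative.

```python
# Compute density implied by the quoted figures (illustrative only).

AI_TRAINING_PFLOPS = 72  # quoted AI training performance
RACK_UNITS = 10          # quoted form factor (10U)
GPU_COUNT = 8            # DGX B200 contains 8 B200 GPUs


def compute_density() -> dict:
    """Return petaFLOPS per rack unit and per GPU."""
    return {
        "pflops_per_rack_unit": AI_TRAINING_PFLOPS / RACK_UNITS,
        "pflops_per_gpu": AI_TRAINING_PFLOPS / GPU_COUNT,
    }


if __name__ == "__main__":
    for name, value in compute_density().items():
        print(f"{name}: {value:g}")
```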

NVIDIA DGX OS

NVIDIA DGX systems come with a pre-installed NVIDIA DGX OS software stack – Ubuntu or Red Hat Enterprise Linux, optimized drivers (GPU, networking), Docker engine, container management, system management, datacenter management tools and security features.

NVIDIA AI Enterprise

NVIDIA AI Enterprise offers an end-to-end, secure, cloud-native suite of AI software that provides a large catalog of optimized and up-to-date data science libraries, tools, pre-trained models, frameworks, and more. The entire software stack is fully covered by NVIDIA enterprise support.

NVIDIA Mission Control

NVIDIA Mission Control is a software package consisting of NVIDIA Base Command and NVIDIA Run:ai, which together provide a solid software base for orchestration, Slurm, and Jupyter Notebook environments, delivering an easy-to-use, enterprise-proven scheduling and orchestration solution.

NGC application containers

NVIDIA NGC is a public catalog of the most widely used AI frameworks, GPU-accelerated applications, pre-trained AI models, and workflows. You can run your AI workloads right after your DGX system is delivered. The NGC catalog is regularly updated, so you always get the latest tuned software components for the highest performance of your AI applications.
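
To make the "run right after delivery" workflow more concrete, here is a minimal sketch that builds (but does not execute) a `docker run` command for an NGC container. It assumes Docker with the NVIDIA container runtime, which provides the `--gpus` flag, and uses the `nvcr.io` registry that hosts NGC images. The helper name `ngc_run_command` is hypothetical, and `<tag>` is a placeholder to be replaced with a release tag from the NGC catalog.

```python
import shlex

NGC_REGISTRY = "nvcr.io"  # registry that hosts NGC container images


def ngc_run_command(image: str, tag: str, command: list) -> list:
    """Build the argument list for running a command in an NGC container.

    Hypothetical helper: it only assembles the arguments and never
    starts a container itself.
    """
    return [
        "docker", "run",
        "--rm",           # remove the container when it exits
        "--gpus", "all",  # expose every GPU in the system to the container
        f"{NGC_REGISTRY}/{image}:{tag}",
        *command,
    ]


if __name__ == "__main__":
    # "<tag>" is a placeholder, not a real release tag.
    cmd = ngc_run_command("nvidia/pytorch", "<tag>", ["nvidia-smi"])
    print(shlex.join(cmd))
```

Running `nvidia-smi` inside the container is a common first check that all GPUs are visible. To actually start the container, the list can be passed to `subprocess.run`.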

Supercomputing for any business with ease

NVIDIA DGX SuperPOD, built on DGX B200 systems, enables leading enterprises to deploy large-scale, turnkey infrastructure backed by NVIDIA’s AI expertise. Accelerate any of your projects with the SuperPOD's modular and versatile architecture, and benefit from scalable performance that shortens inference and training times.
