The latest experimental HPC and AI systems at IT4I, Technical University of Ostrava

In addition to its large supercomputers (list), IT4Innovations also operates smaller complementary systems. These systems represent emerging, non-traditional or highly specialized hardware architectures not found in conventional supercomputing data centers. In the last days of October, Complementary Systems I was put into operation.

New programming models, libraries and application development tools are also deployed on the complementary systems to extract maximum performance from the hardware. The systems thus give scientific teams the opportunity to test experimental architectures, compare them with traditional ones (x86 + Nvidia GPUs), and optimize and accelerate computation in new research areas. Complementary Systems I consists of several parts, all based on servers from Hewlett Packard Enterprise and delivered and implemented by M Computers from Brno.

Compute partition 1 – Arm A64FX processors

The compute nodes of the first part of Complementary Systems I are built on Arm A64FX processors with integrated fast HBM2 memory. It is essentially a fragment of Fugaku, the world’s most powerful supercomputer of recent years (currently the second most powerful), which is operated by Japan’s RIKEN Center for Computational Science. The configuration consists of eight HPE Apollo 80 compute nodes interconnected by a 100 Gb/s Infiniband network. Compute node configuration:

- 1× Arm A64FX, 48 cores, 2 GHz, 32 GB HBM2 memory

- 400 GB SSD

- HDR Infiniband 100 Gb/s

Compute partition 2 – Intel processors, Intel PMEM, Intel FPGA (Altera)

The compute nodes in this part of the Complementary Systems are based on Intel technologies. The servers are equipped with third-generation Intel Xeon processors, persistent (non-volatile) Intel Optane memory with a total capacity of 2 TB and 8 TB per server, and Intel Stratix 10 MX2100 FPGA cards. This part consists of two HPE ProLiant DL380 Gen 10 Plus nodes in the configuration:

- 2× Intel Xeon Gold 6338, 32 cores, 2 GHz

- 256 GB RAM

- 8 TB and 2 TB Intel Optane Persistent Memory (NVDIMM)

- 2 TB NVMe SSD

- 2× FPGA Bittware 520N-MX (Intel Stratix 10)

- HDR Infiniband 100 Gb/s

Compute partition 3 – AMD processors, AMD accelerators, AMD FPGAs (Xilinx)

The third part of Complementary Systems is built on AMD technologies. The servers are equipped with third-generation AMD EPYC processors, four AMD Instinct MI100 GPU cards interconnected by a fast bus (AMD Infinity Fabric) and two Xilinx Alveo FPGA cards of different performance levels. Xilinx is one of AMD’s latest major acquisitions. This part consists of two HPE Apollo 6500 Gen 10+ nodes in the configuration:

- 2× AMD EPYC 7513, 32 cores, 2.6 GHz

- 256 GB RAM

- 2 TB NVMe SSD

- 4× AMD Instinct MI100 (AMD Infinity Fabric Link)

- FPGA Xilinx Alveo U250

- FPGA Xilinx Alveo U280

- HDR Infiniband 100 Gb/s
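The three node configurations above can be totalled with a little arithmetic. The following is a minimal Python sketch, not an official inventory: the partition names and the `Partition` class are hypothetical, and it assumes that the core counts in the lists are per processor (so a node with 2× 32-core CPUs has 64 cores).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Partition:
    """One compute partition of Complementary Systems I (names are hypothetical)."""
    name: str
    nodes: int
    cpus_per_node: int
    cores_per_cpu: int   # assumption: the listed core count is per processor
    gpus_per_node: int
    fpgas_per_node: int

    @property
    def total_cores(self) -> int:
        return self.nodes * self.cpus_per_node * self.cores_per_cpu

    @property
    def total_gpus(self) -> int:
        return self.nodes * self.gpus_per_node

    @property
    def total_fpgas(self) -> int:
        return self.nodes * self.fpgas_per_node


# Figures taken from the configuration lists in the article.
PARTITIONS = [
    Partition("p1-arm-a64fx", nodes=8, cpus_per_node=1, cores_per_cpu=48,
              gpus_per_node=0, fpgas_per_node=0),
    Partition("p2-intel", nodes=2, cpus_per_node=2, cores_per_cpu=32,
              gpus_per_node=0, fpgas_per_node=2),
    Partition("p3-amd", nodes=2, cpus_per_node=2, cores_per_cpu=32,
              gpus_per_node=4, fpgas_per_node=2),
]

# Print a per-partition summary followed by the grand totals.
for p in PARTITIONS:
    print(f"{p.name}: {p.total_cores} cores, "
          f"{p.total_gpus} GPUs, {p.total_fpgas} FPGAs")

print("total cores:", sum(p.total_cores for p in PARTITIONS))
print("total GPUs:", sum(p.total_gpus for p in PARTITIONS))
print("total FPGAs:", sum(p.total_fpgas for p in PARTITIONS))
```

Under these assumptions, the Arm partition contributes most of the CPU cores, while all accelerator cards sit in the Intel and AMD partitions.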

Software equipment

An important part of Complementary Systems I is its software, which includes programming environments, compilers, numerical libraries, and tools for algorithm development and debugging.

HPE Cray Programming Environment

HPE Cray Programming Environment is a comprehensive suite for developing HPC applications in heterogeneous environments. It supports all Complementary Systems architectures. It includes optimized libraries, support for the most widely used programming languages, and several tools for analyzing, debugging, and optimizing parallel algorithms. HPE also provides support for the tools included in the Cray PE package.

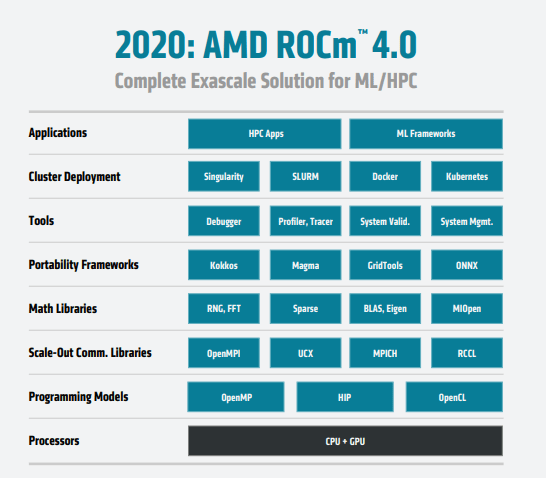

AMD ROCm

ROCm is AMD’s software package that includes programming models, development tools, libraries, and integration tools for the most widely used AI frameworks that run on top of AMD GPGPU accelerators.

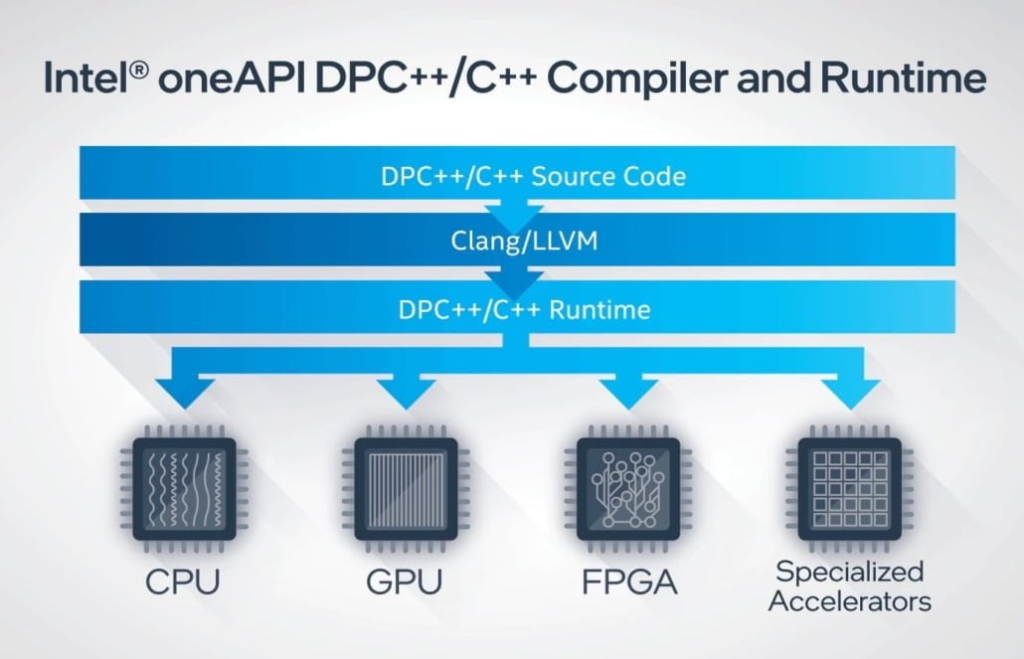

Intel oneAPI

oneAPI is Intel’s tool for developing applications deployed on heterogeneous platforms, e.g. CPUs, GPUs, FPGAs and more. In Complementary Systems, the plan is to use it mainly for the FPGA cards.