Description

AI Server 4 GPU

Our AI Servers are the perfect solution for any of your HPC or AI applications. Built on the ASUS ESC and RS series, each solution is configured and tuned exactly for your needs, so you won’t have to worry about underpowering your project or paying for excess hardware.

With the latest AMD CPUs and the world's most powerful GPU accelerators from NVIDIA, you can start your HPC & AI journey right away.

Powered by the latest generation of AMD EPYC™ CPUs

Professional Solution

For AI Workloads

GPU-accelerated

GPU-accelerated AI Servers are suitable for a wide range of computing tasks due to their parallel capabilities and high performance.

QA & Testing

Turn to our experts to perform comprehensive, multi-stage testing and auditing of your software.

Reliable Hardware

We use only professional enterprise server components from renowned global manufacturers that are designed for 24/7 operation.

Best Performance

With the latest AMD processors and the world's most powerful graphics accelerators from NVIDIA, AI Servers deliver the highest performance.

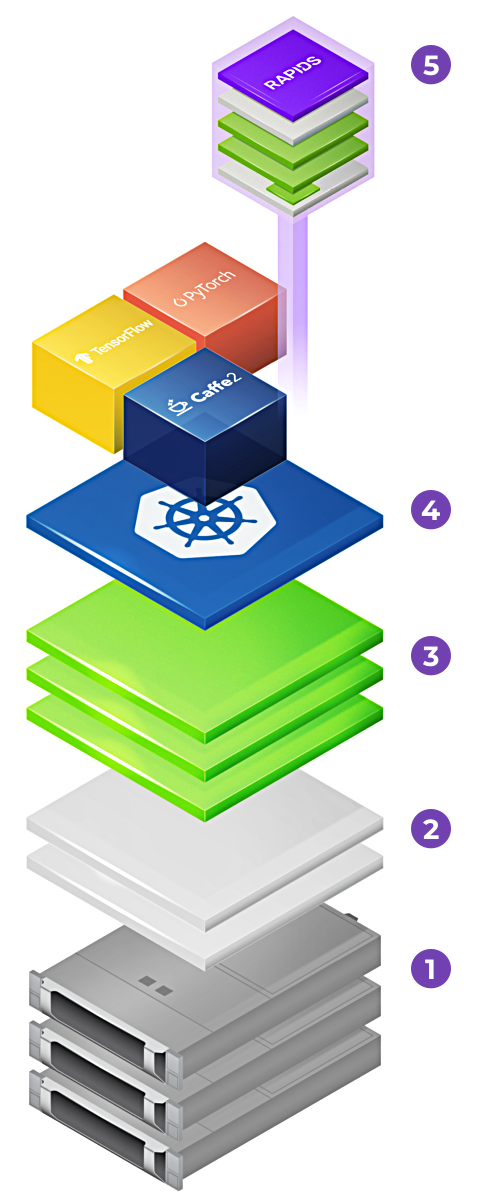

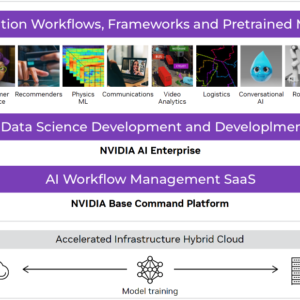

AI Software Stack

Our software portfolio covers dozens of AI use cases: just download an application container to the AI Server and start developing your own AI application.

Premium Support

All our AI servers come with a 3-year warranty and 9x5 remote support. DGX systems offer premium support for both the hardware and the software stack.

Quick guide

Not sure how to choose the right GPU card? Try our Quick guide or full GPU Selector.

Computing on the most powerful NVIDIA GPUs

| Workloads | GPU | AI Training | AI Inference | HPC | Rendering | Virtual workstation | Virtual desktop (VDI) |
|---|---|---|---|---|---|---|---|
| Compute | B300 262 GB SXM6 | ✓ | ✓ | | | | |
| | B200 180 GB SXM6 | ✓ | ✓ | | | | |
| | H200 NVL 141 GB | ✓ | ✓ | ✓ | | | |
| | H100 80 GB (EOL) | ✓ | ✓ | ✓ | | | |
| Compute / Graphics | RTX PRO 6000 Blackwell 96 GB | ✓ | ✓ | ✓ | ✓ | ✓ | |
| | RTX PRO 5000 Blackwell 72 GB | ✓ | ✓ | ✓ | ✓ | ✓ | |
| | L40S 48 GB | ✓ | ✓ | | | | |
| | L40 48 GB | ✓ | ✓ | ✓ | | | |
| | A16 64 GB | ✓ | ✓ | | | | |
| Compute / Graphics (SFF*) | L4 24 GB | ✓ | ✓ | ✓ | ✓ | | |

We Provide a Full AI Software Stack

GPU server

You can configure your GPU-based server in the AI server configurator according to your needs, or we can recommend the best configuration on request.

Operating system

Each AI server comes with the Ubuntu Linux operating system and Docker Engine pre-installed, so you can run application containers on your GPU server.
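As a rough illustration of running a container with GPU access under Docker, the sketch below builds a command that starts a throwaway container and runs `nvidia-smi` inside it. It assumes the NVIDIA container runtime is configured for Docker and uses the generic `ubuntu` image as a placeholder; the function names are hypothetical and not part of any delivered tooling.

```python
import shutil
import subprocess


def gpu_smoke_test_command(image: str = "ubuntu", gpus: str = "all") -> list[str]:
    """Build a `docker run` command that runs nvidia-smi in a temporary container.

    `image` is a placeholder (assumption); `--gpus` is Docker's flag for
    exposing GPUs to a container.
    """
    return ["docker", "run", "--rm", "--gpus", gpus, image, "nvidia-smi"]


def run_gpu_smoke_test(image: str = "ubuntu") -> int:
    """Run the smoke test and return the exit code of the docker command."""
    if shutil.which("docker") is None:
        raise RuntimeError("docker was not found on PATH")
    completed = subprocess.run(gpu_smoke_test_command(image), check=False)
    return completed.returncode


if __name__ == "__main__":
    # Print the command only; call run_gpu_smoke_test() on a GPU server to execute it.
    print(" ".join(gpu_smoke_test_command()))
```

If the command lists the installed GPUs, containers on the server can most likely reach the hardware; a non-zero exit code usually points at the driver or container runtime setup.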

NVIDIA GPU Operator

We provide several components in the AI software stack layer: the NVIDIA driver, the NVIDIA container runtime, and the NVIDIA GPU device plug-in for Kubernetes.

Kubernetes

Kubernetes is an open-source platform for orchestrating application containers. You can easily deploy, scale, and manage your entire AI infrastructure.
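To give a feel for how a workload asks Kubernetes for a GPU, here is a minimal sketch that generates a Pod manifest requesting the `nvidia.com/gpu` resource, which is the resource name advertised by the NVIDIA device plug-in. The pod name, the image reference, and the helper function are illustrative assumptions; the image must be one in which `nvidia-smi` is available.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Return a Pod manifest (as a dict) that requests `gpus` NVIDIA GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "command": ["nvidia-smi"],
                    # GPUs are requested through resource limits.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # The image reference below is a hypothetical placeholder, not a real image.
    manifest = gpu_pod_manifest("gpu-check", "example.registry/cuda-base:latest")
    print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed manifest could be piped into `kubectl apply -f -` on a cluster where the GPU components are installed.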

NGC application containers

NVIDIA NGC is a public catalog of the most widely used AI frameworks, GPU-accelerated applications, AI models, and workflows. You can start running your AI workloads right after your AI server is delivered. The NGC catalog is regularly updated, so you always get the latest compatible software components for the highest performance of your AI applications.
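Pulling a container from NGC might look roughly like the sketch below, which composes an image reference for the NGC registry host `nvcr.io` and the matching `docker pull` command. The repository and tag shown are examples only and should be checked against the current NGC catalog; the helper names are hypothetical.

```python
NGC_REGISTRY = "nvcr.io"  # registry host used by NGC container images


def ngc_image(repository: str, tag: str) -> str:
    """Compose a full image reference, e.g. repository 'nvidia/pytorch'."""
    if not repository or not tag:
        raise ValueError("repository and tag must be non-empty")
    return f"{NGC_REGISTRY}/{repository}:{tag}"


def pull_command(image: str) -> list[str]:
    """Build the `docker pull` command for an image reference."""
    return ["docker", "pull", image]


if __name__ == "__main__":
    # Example repository and tag (assumption); pick a current tag from the catalog.
    image = ngc_image("nvidia/pytorch", "24.05-py3")
    print(" ".join(pull_command(image)))
```

Pinning an explicit tag rather than relying on a moving default keeps the framework version reproducible across servers.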
