Description

The Universal System for Every AI Workload

As the fourth generation of the world’s first purpose-built AI infrastructure, DGX H200 is designed to be the centerpiece of an enterprise AI center of excellence.

It’s a fully optimized hardware and software platform that includes full support for the new range of NVIDIA AI software solutions, a rich ecosystem of third-party support, and access to expert advice from NVIDIA professional services.

Computing on the most powerful NVIDIA GPUs

NVIDIA AI software solutions

The Cornerstone of Your AI Center of Excellence

DGX H200 is a fully integrated hardware and software solution on which to build your AI Center of Excellence. It includes NVIDIA Base Command™ and the NVIDIA AI Enterprise software suite, plus expert advice from NVIDIA DGXperts.

Leadership-Class Infrastructure on Your Terms

Get the power of DGX H200 in a multitude of ways that fit your business: on premises, co-located, rented from managed service providers, and more. And with DGX-Ready Lifecycle Management, organizations get a predictable financial model to keep their deployment at the leading edge.

Break Through the Barriers to AI at Scale

NVIDIA DGX H200 features 6X more performance, 2X faster networking, and high-speed scalability. Its architecture is supercharged for the largest workloads such as generative AI, natural language processing, and deep learning recommendation models.

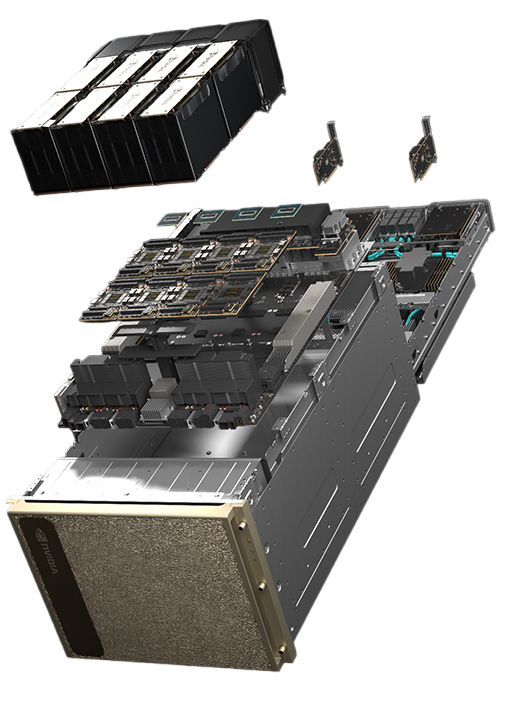

NVIDIA DGX H200

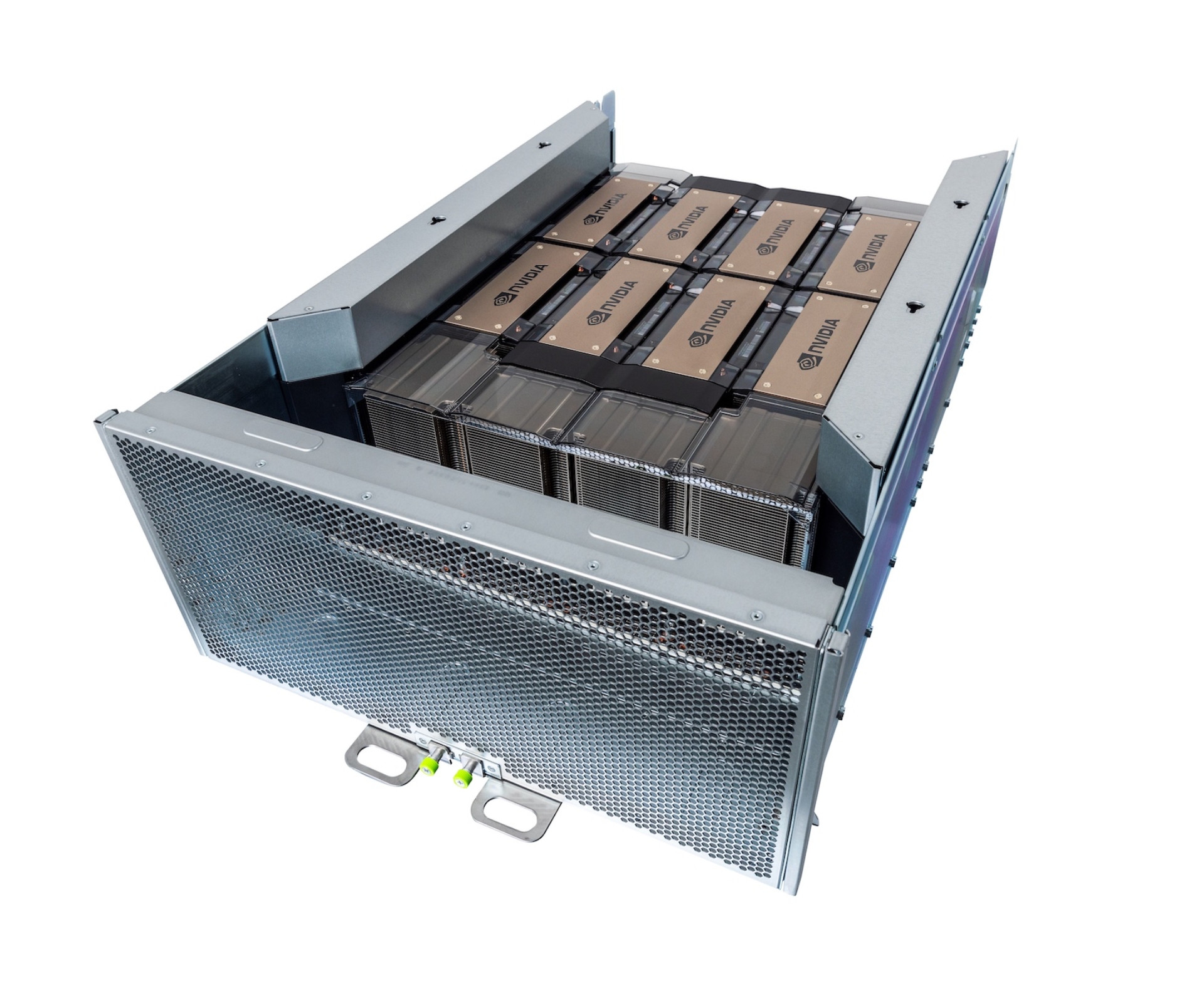

NVIDIA H200 SXM5 GPUs

8× NVIDIA H200 with 1128 GB of total GPU memory; 18 NVLink connections per GPU with 900 GB/s of GPU-to-GPU bandwidth
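The headline GPU figures follow from per-GPU values. As a quick sanity check, the sketch below assumes 141 GB per GPU (the figure given in the specification table) and, as a simplifying assumption, treats the 900 GB/s as evenly divided across the 18 NVLink connections. The variable names are illustrative only.

```python
# Figures taken from this page; the names are illustrative.
GPU_COUNT = 8
MEMORY_PER_GPU_GB = 141      # per-GPU memory from the specification table
NVLINKS_PER_GPU = 18
NVLINK_TOTAL_GB_S = 900      # GPU-to-GPU bandwidth per GPU, in GB/s

total_memory_gb = GPU_COUNT * MEMORY_PER_GPU_GB

# Assumption: the bandwidth is split evenly across all NVLink connections.
per_link_gb_s = NVLINK_TOTAL_GB_S / NVLINKS_PER_GPU

print(f"Total GPU memory: {total_memory_gb} GB")
print(f"Bandwidth per NVLink connection: {per_link_gb_s:.0f} GB/s")
```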

NVLink & NVSwitch

4x NVIDIA NVSwitches with 7.2TB/s of bidirectional bandwidth

30TB Gen4 NVMe SSD

Data: 8× 3.84 TB NVMe with 50 GB/s of peak bandwidth, 2× faster than Gen3 NVMe SSDs. OS: 2× 1.92 TB NVMe.
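The "30TB" label is a rounded figure. A minimal sketch of the arithmetic, using only the drive counts and sizes quoted above, is shown here. The per-drive bandwidth is an assumption that the 50 GB/s peak is shared evenly by the eight data drives.

```python
# Drive counts and sizes as quoted on this page; names are illustrative.
DATA_DRIVES = 8
DATA_DRIVE_TB = 3.84
OS_DRIVES = 2
OS_DRIVE_TB = 1.92
PEAK_BANDWIDTH_GB_S = 50     # aggregate peak across the data drives

data_capacity_tb = DATA_DRIVES * DATA_DRIVE_TB
os_capacity_tb = OS_DRIVES * OS_DRIVE_TB

# Assumption: the aggregate peak is divided evenly across the data drives.
per_drive_gb_s = PEAK_BANDWIDTH_GB_S / DATA_DRIVES

print(f"Raw data capacity: {data_capacity_tb:.2f} TB")
print(f"Raw OS capacity: {os_capacity_tb:.2f} TB")
print(f"Peak bandwidth per data drive: {per_drive_gb_s:.2f} GB/s")
```

These are raw capacities; usable space depends on how the drives are configured.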

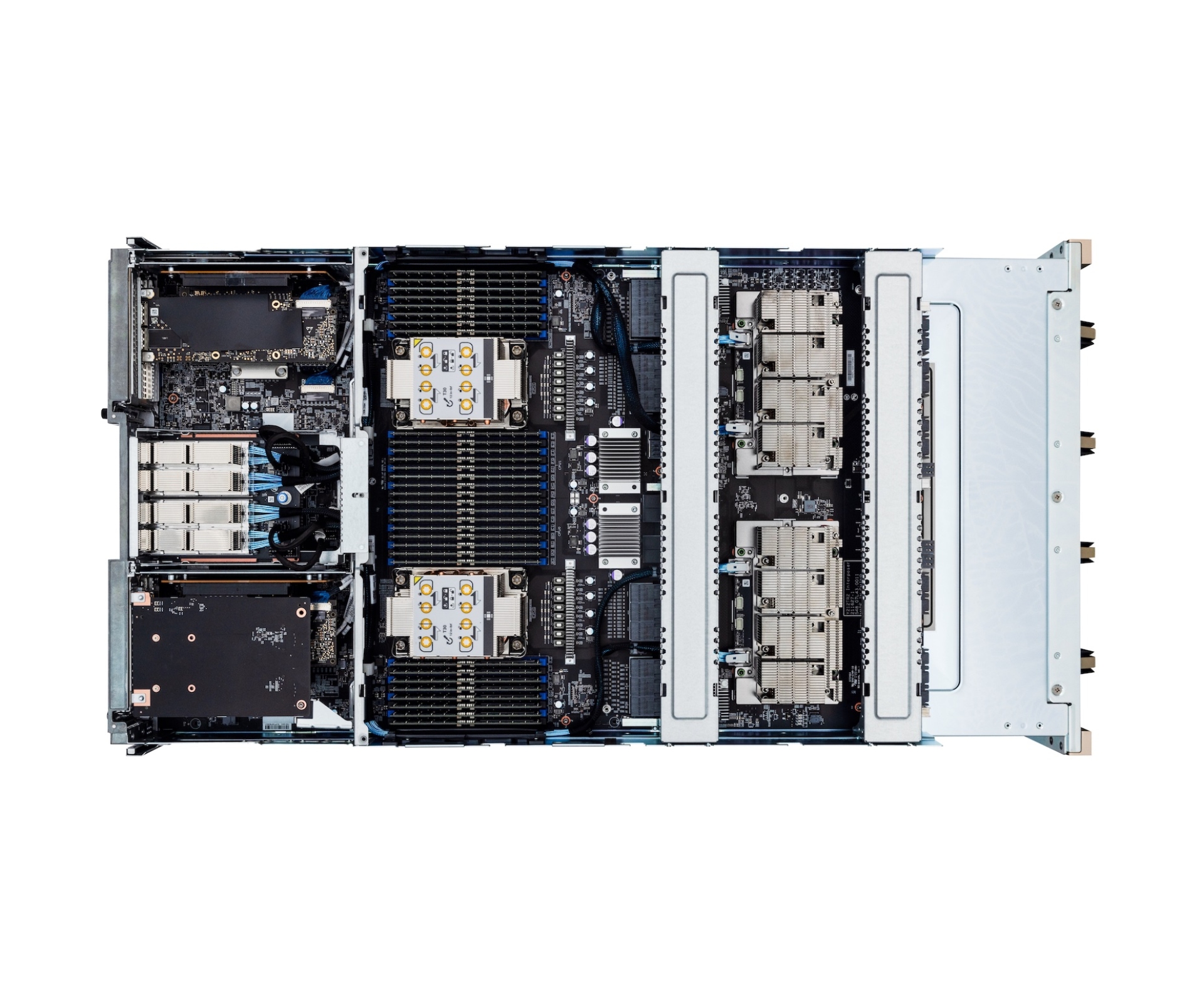

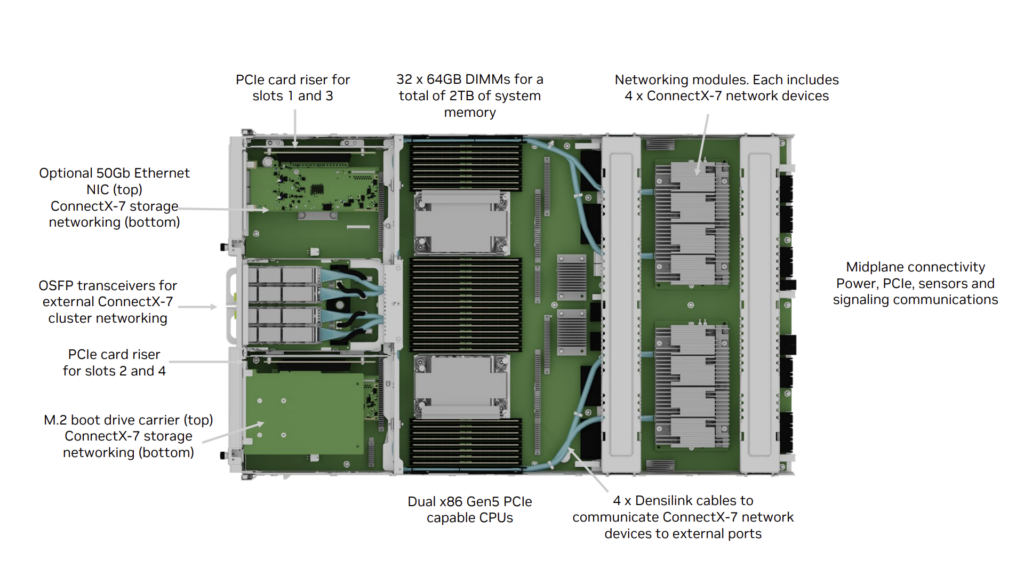

Dual Intel Xeon Platinum 8480C CPU

A total of 112 processor cores and 2TB of system memory

High-speed fabric

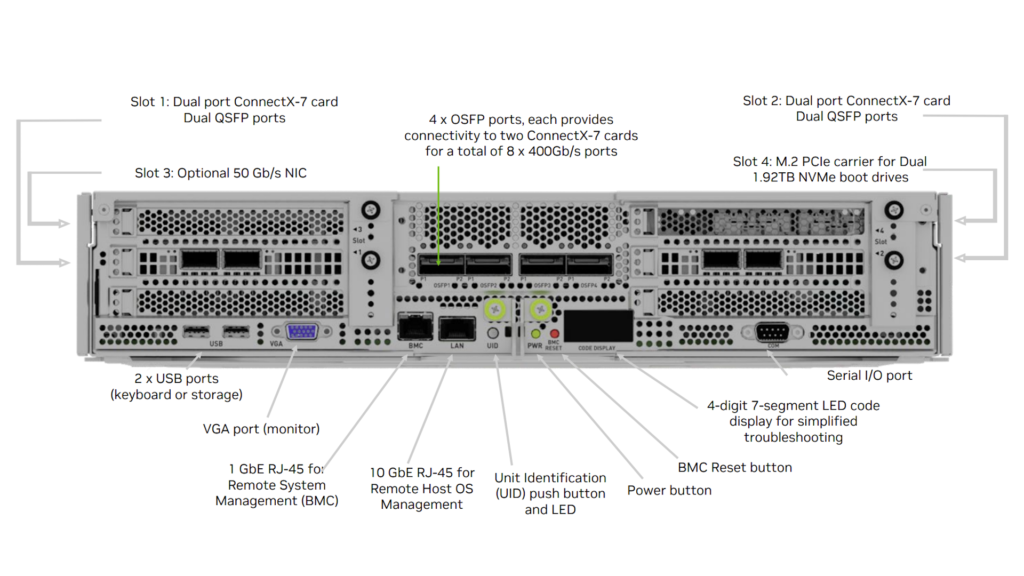

10x NVIDIA ConnectX-7 with 400Gb/s InfiniBand / Ethernet network interface

Optimized Software Stack

DGX OS, all necessary system software, GPU-accelerated applications and pre-trained models

Through a combination of tuned hardware, software, and NVIDIA support, NVIDIA DGX delivers significantly higher performance in the training phase of machine learning applications.

Technical Specifications

| NVIDIA DGX H200 1128 GB | |

|---|---|

| GPUs | 8× NVIDIA H200 SXM5 141GB |

| GPU memory | 1128 GB |

| CPU | Dual Intel Xeon Platinum 8480C CPU, (112 cores) 2.00 GHz (Base), 3.80 GHz (Max Boost) |

| Performance | 32 PetaFLOPS (FP8) |

| # CUDA cores | 135 168 |

| # Tensor cores | 4 224 |

| Multi-instantce GPU | 56 instances |

| RAM | 2 TB |

| HDD | OS: 2× 1.92 TB NVMe DATA: 30 TB (8× 3.84 TB) NVMe |

| Networking | 8x single-port ConnectX-7 VPI 400 Gb/s InfiniBand/ 200Gb/s Ethernet 2x dual-port ConnectX-7 VPI 400 Gb/s InfiniBand/ 200Gb/s Ethernet |

| Max. power usage | ~10,2 kW max |

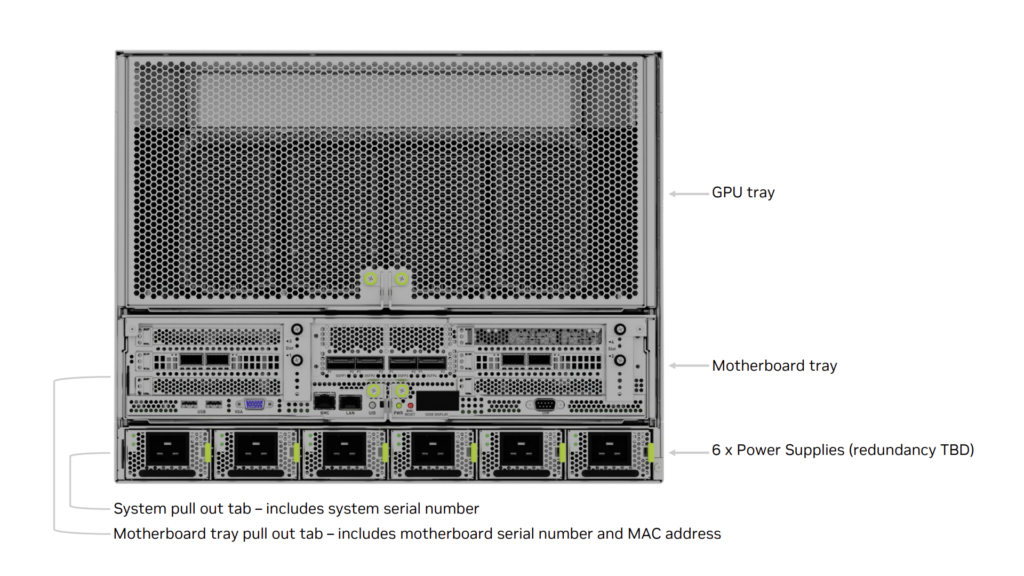

| Form factor | rack, 8U |

| Datasheet | Download |
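The system-level totals in the table can be broken down per GPU by dividing by the GPU count. The sketch below does only that division; it assumes every resource is spread evenly across the eight GPUs, and the dictionary keys are illustrative labels rather than official terms.

```python
# System-wide totals as listed in the specification table.
GPU_COUNT = 8
system_totals = {
    "GPU memory (GB)": 1128,
    "CUDA cores": 135_168,
    "Tensor cores": 4_224,
    "MIG instances": 56,
    "FP8 petaFLOPS": 32,
}

# Assumption: each total is divided evenly across the GPUs.
per_gpu = {name: total / GPU_COUNT for name, total in system_totals.items()}

for name, value in per_gpu.items():
    print(f"{name}: {value:g} per GPU")
```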

Fastest Time to Solution

DGX H200 integrates a tested and optimized DGX software stack, including an AI-tuned base operating system, all necessary system software, GPU-accelerated applications, pre-trained models, and more from NGC.

NVIDIA Enterprise Services provide support, education, and professional services for your DGX infrastructure. With NVIDIA experts available at every step of your AI journey, Enterprise Services can help you get your projects up and running quickly and successfully.

NVIDIA DGX Systems Provide

Full AI Software Stack

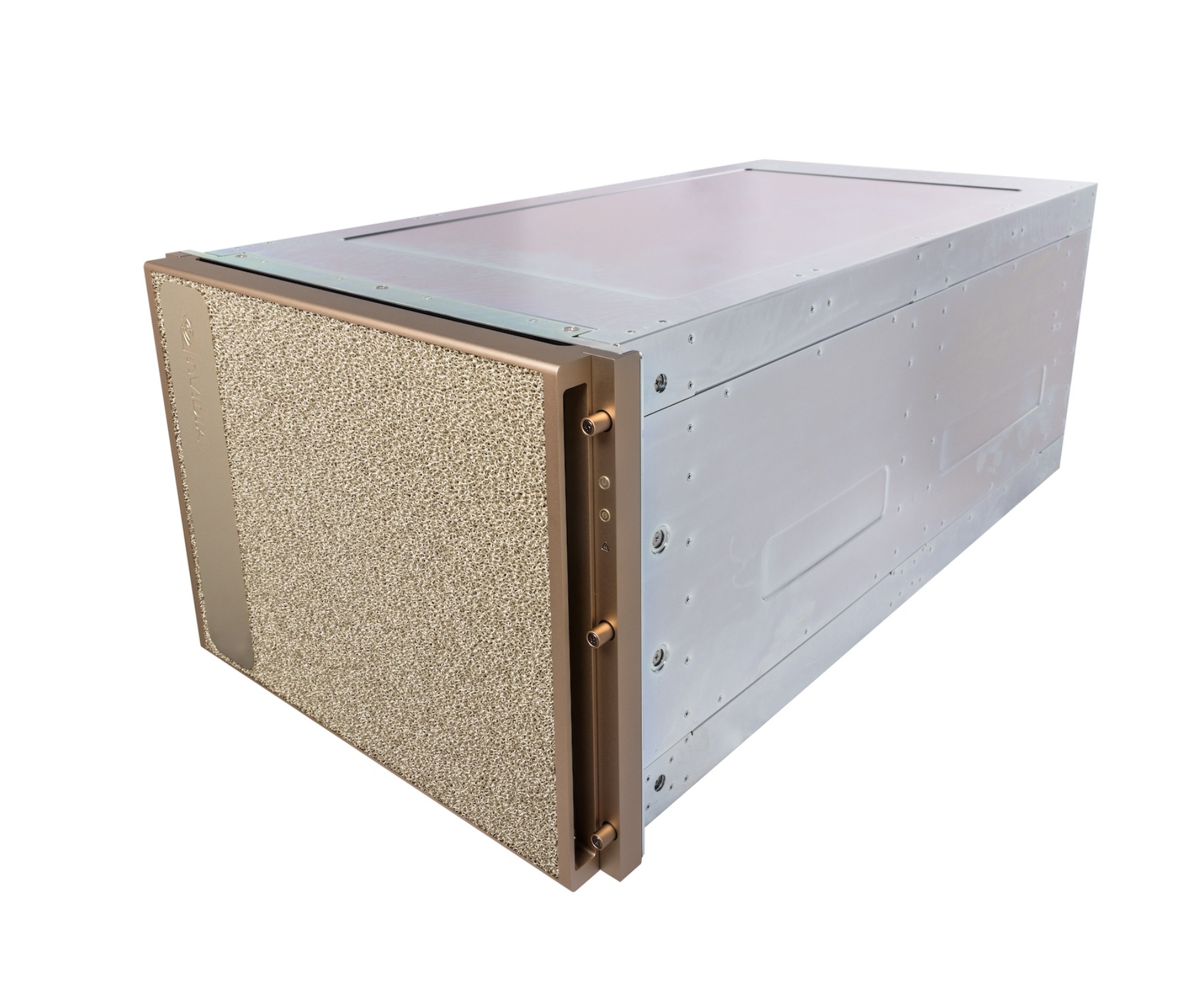

NVIDIA DGX server

The universal system for all AI workloads – from analytics to training to inference. DGX H200 sets a new bar for compute density, packing 32 petaFLOPS of AI performance into an 8U form factor and replacing legacy compute infrastructure with a single, unified system.

NVIDIA DGX OS

NVIDIA DGX systems come with the NVIDIA DGX OS software stack pre-installed: Ubuntu or Red Hat Enterprise Linux, optimized GPU and networking drivers, Docker Engine, container management, system management, data center management tools, and security features.
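With the GPU drivers pre-installed, one simple way to confirm that all GPUs are visible is to query `nvidia-smi`. The following is a minimal sketch, not an official DGX tool: the function names are hypothetical, and it returns an empty list on machines where `nvidia-smi` is missing or fails.

```python
import shutil
import subprocess


def parse_gpu_inventory(csv_text):
    """Parse 'name, memory_mib' lines into (name, memory_mib) tuples."""
    gpus = []
    for line in csv_text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        name, memory_mib = (field.strip() for field in line.rsplit(",", 1))
        gpus.append((name, int(memory_mib)))
    return gpus


def query_gpus():
    """Return the GPU inventory, or [] if nvidia-smi is unavailable or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=False,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_inventory(result.stdout)


if __name__ == "__main__":
    for name, memory_mib in query_gpus():
        print(f"{name}: {memory_mib} MiB")
```

On a DGX H200 this would be expected to list eight entries, one per GPU.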

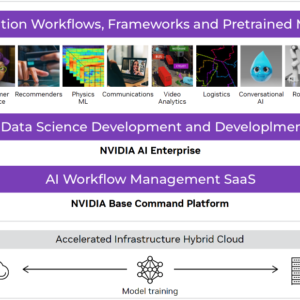

NVIDIA AI Enterprise

NVIDIA AI Enterprise is an end-to-end, secure, cloud-native suite of AI software that provides a large catalog of optimized, up-to-date data science libraries, tools, pre-trained models, frameworks, and more. The entire software stack is covered by NVIDIA enterprise support.

NVIDIA Base Command

NVIDIA Base Command provides Kubernetes (a platform for orchestrating application containers), Slurm, and Jupyter Notebook environments, delivering an easy-to-use, enterprise-proven scheduling and orchestration solution based on well-established standards.

NGC application containers

NVIDIA NGC is a public catalog of the most widely used AI frameworks, GPU-accelerated applications, pre-trained AI models, and workflows. You can run your AI workloads as soon as your DGX system is delivered. The NGC catalog is regularly updated, so you always get the latest tuned software components for the highest performance of your AI applications.
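As an illustration of how an NGC container might be launched, the sketch below only assembles a `docker run` command line; it does not execute anything. It assumes Docker with NVIDIA GPU support is set up on the system. The helper name `ngc_run_command` is hypothetical, and `<tag>` is a placeholder to be replaced with a real tag from the NGC catalog.

```python
import shlex


def ngc_run_command(image, tag, gpus="all"):
    """Build a docker command that runs an NGC image with GPU access."""
    return [
        "docker", "run", "--rm",
        "--gpus", gpus,                 # expose GPUs to the container
        f"nvcr.io/{image}:{tag}",       # NGC registry path
    ]


# "<tag>" is a placeholder; look up a current tag in the NGC catalog.
command = ngc_run_command("nvidia/pytorch", "<tag>")
print(shlex.join(command))
```

Returning the command as a list keeps it safe to pass to `subprocess.run` without shell quoting issues.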
