RESEARCHERS FROM THE UNIVERSITY OF OSTRAVA TRAIN AI ON THE NVIDIA SYSTEM

The Institute for Research and Applications of Fuzzy Modeling of the University of Ostrava was the first in the Czech Republic to acquire the latest supercomputer for artificial intelligence – NVIDIA DGX Station A100. And while the whole system isn’t much bigger than a regular desktop computer, don’t be fooled by its size. Beneath the golden case lies the performance of an incredible 2.5 petaFLOPS for AI.

About the customer

We delivered the NVIDIA DGX Station A100 to a team of academic researchers from the Institute for Research and Applications of Fuzzy Modeling who work on problems in computer vision and 3D computer graphics. Their work is divided between purely academic research and solutions designed for the industrial sector. The industrial solutions rely on deep neural networks and focus mainly on defectoscopy (defect detection) of products, especially automotive parts. In academic research, the team studies the role of data, its pre- and post-processing, and anonymization, and develops new loss functions. You can find their work on the project page.

The Institute for Research and Applications of Fuzzy Modeling (IRAFM) is a research institute of the University of Ostrava in Ostrava, Czech Republic. It focuses on the theory and practical development of fuzzy modeling methods, i.e., mathematical methods for building models that can cope with imprecise information.

The ideal shared solution for a scientific team

The scientific team uses standard office desktops for prototyping and testing applications. These applications, which consist mainly of deep neural networks, are later migrated to the NVIDIA DGX Station A100 with its four NVIDIA A100 40GB GPUs. Every team member has remote access to the Station and uses it for long-running computations. The DGX Station also serves as a fast data storage and exchange device.
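The article does not say how the team divides the shared Station among its members. One common approach, shown here purely as an assumption, is to pin each job to specific GPUs through the `CUDA_VISIBLE_DEVICES` environment variable, which CUDA applications (including TensorFlow and PyTorch) respect. The helper `job_env` below is hypothetical: it hands out contiguous blocks of the four GPU indices round-robin.

```python
import os

NUM_GPUS = 4  # the DGX Station A100 has four A100 GPUs


def job_env(job_index: int, gpus_per_job: int = 1, num_gpus: int = NUM_GPUS) -> dict:
    """Hypothetical helper: return a copy of the environment with
    CUDA_VISIBLE_DEVICES set so that job `job_index` sees its own GPUs.

    GPUs are split into blocks of `gpus_per_job`; jobs are assigned to
    blocks round-robin, wrapping around when there are more jobs than blocks.
    """
    if not 1 <= gpus_per_job <= num_gpus:
        raise ValueError("gpus_per_job must be between 1 and num_gpus")
    blocks = num_gpus // gpus_per_job
    start = (job_index % blocks) * gpus_per_job
    devices = range(start, start + gpus_per_job)
    env = dict(os.environ)
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(d) for d in devices)
    return env
```

For example, `subprocess.run(["python", "train.py"], env=job_env(1, gpus_per_job=2))` would start a (hypothetical) `train.py` that sees only GPUs 2 and 3. A real multi-user setup might instead rely on a job scheduler or on Multi-Instance GPU partitions.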

“Due to DGX Station’s high computational capacity, we are able to select proper network parameters from a much larger search space, which directly translates to a better quality solution. This is amplified by training networks on bigger datasets in more iterations than would be possible on a standard desktop GPU in the same amount of time. As a result, the customer receives a better solution in a shorter time,” says Petr Hurtík, the team leader.

BUSINESS CHALLENGES

✓ Training large deep learning models thanks to the large GPU memory

✓ Faster processing of big datasets thanks to multiple GPUs

✓ Working on multiple projects simultaneously

KEY NVIDIA SOLUTION POINTS

✓ Excellent CUDA support

✓ Fast interconnect between GPUs

✓ Optimized for deep neural network training

BUSINESS AND PRODUCT RESULTS

✓ Laser welding and guiding of an industrial robot

✓ Simulation of a goniophotometer device

✓ Software for detecting neurodegenerative diseases

PROCESSING ENGINES USED

✓ TensorFlow / Keras

✓ PyTorch / PyTorch Lightning

✓ CUDA, cuDNN

SOFTWARE & TOOLS USED

✓ NVIDIA Docker + NVIDIA containers, TensorRT

✓ Detectron2, MMDetection

✓ NVIDIA Kaolin

NVIDIA PRODUCTS USED

✓ NVIDIA DGX Station A100 160GB

✓ NVIDIA RTX 3090

✓ NVIDIA Jetson Nano

Utilizing NVIDIA DGX Station A100 is one of the essential steps for a research team to compete globally.

Mgr. Petr Hurtík, Ph.D., team leader

Solution overview and description

The Ampere generation brought the biggest generational increase in AI computing power. If we compare the performance of the DGX Station A100 with that of the NVIDIA DGX-2, the top supercomputer we delivered to IT4Innovations (IT4I) in 2019 (see “NVIDIA DGX-2 on IT4Innovations”), the DGX Station would, perhaps surprisingly, win. With a theoretical peak of 2.5 petaFLOPS in FP16 calculations, the DGX Station and its four Ampere A100 accelerators would beat the DGX-2's sixteen (!) Volta V100 accelerators, whose theoretical maximum is “only” 2 petaFLOPS.
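Both totals can be reproduced from NVIDIA's per-GPU datasheet peaks. The sketch below assumes 624 TFLOPS of FP16 Tensor Core throughput per A100 (a figure that counts 2:4 structured sparsity; the dense peak is 312 TFLOPS) and 125 TFLOPS per V100. These are theoretical peaks, not measured results.

```python
# Per-GPU theoretical peak FP16 Tensor Core throughput in TFLOPS (NVIDIA datasheets)
A100_FP16_SPARSE_TFLOPS = 624  # with 2:4 structured sparsity
A100_FP16_DENSE_TFLOPS = 312   # without sparsity
V100_FP16_TFLOPS = 125         # V100 Tensor Core peak


def system_peak_pflops(num_gpus: int, tflops_per_gpu: float) -> float:
    """Aggregate theoretical peak in petaFLOPS (1 PFLOPS = 1000 TFLOPS)."""
    return num_gpus * tflops_per_gpu / 1000


station = system_peak_pflops(4, A100_FP16_SPARSE_TFLOPS)        # DGX Station A100
station_dense = system_peak_pflops(4, A100_FP16_DENSE_TFLOPS)   # same, dense math only
dgx2 = system_peak_pflops(16, V100_FP16_TFLOPS)                 # DGX-2

print(f"DGX Station A100 (sparse): {station:.3f} PFLOPS")
print(f"DGX Station A100 (dense):  {station_dense:.3f} PFLOPS")
print(f"DGX-2:                     {dgx2:.3f} PFLOPS")
```

The 2.5 petaFLOPS figure is thus 4 × 624 TFLOPS. Without sparsity the four A100s peak at about 1.25 petaFLOPS, so the advantage over the DGX-2 applies to workloads that can exploit structured sparsity.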

From the point of view of energy consumption, the difference is even more striking. The NVIDIA DGX-2, with a 10U form factor and a maximum power draw of 10 kW, requires a rack cabinet in a well-cooled data center.

By contrast, the compact DGX Station A100, with a maximum power draw of only 1.5 kW, can be plugged into a standard wall outlet and placed under an office desk. Its water-cooled design reliably cools the 64-core processor and the four GPUs even at full load.
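Dividing each system's quoted peak by its maximum power draw gives a rough efficiency comparison. This sketch uses only the vendor figures from the text and assumes both systems run at their maximum power; since the Station's 2.5 petaFLOPS includes sparsity, the ratio is an optimistic upper bound.

```python
def tflops_per_kw(peak_pflops: float, max_power_kw: float) -> float:
    """Theoretical peak throughput (TFLOPS) per kilowatt of maximum power draw."""
    return peak_pflops * 1000 / max_power_kw


# Figures quoted in the text: peak AI performance in petaFLOPS, maximum power in kW
station_eff = tflops_per_kw(2.5, 1.5)   # DGX Station A100
dgx2_eff = tflops_per_kw(2.0, 10.0)     # DGX-2
ratio = station_eff / dgx2_eff

print(f"DGX Station A100: {station_eff:.0f} TFLOPS/kW")
print(f"DGX-2:            {dgx2_eff:.0f} TFLOPS/kW")
print(f"Ratio:            {ratio:.1f}x")
```

On these numbers the Station delivers roughly eight times more peak throughput per kilowatt than the DGX-2.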

The device is designed to be easily shared by multiple users or entire teams. The gold workstation sits on wheels for easier handling and offers two 10 Gb Ethernet ports and a dedicated management port for network connectivity. Its suitability for an office environment is underlined by a noise level that does not exceed 37 dB even at full load.

| COMPONENT | SPECIFICATION |
|---|---|
| GPU | 4× NVIDIA A100 SXM4 40 GB |
| GPU MEMORY | 160 GB total (4× 40 GB) |
| CUDA CORES | 27,648 |
| TENSOR CORES | 1,728 |
| CPU | 1× AMD EPYC 7742, 64 cores |
| RAM | 512 GB DDR4 (8× 64 GB) |
| STORAGE | OS: 1× 1.92 TB NVMe; data: 1× 7.68 TB U.2 NVMe |
| NETWORK | 2× 10 Gb/s Ethernet; 1× 1 Gb/s Ethernet (management) |
| GRAPHICS OUTPUT | 4× Mini DisplayPort; 1× VGA |
| MAX. POWER CONSUMPTION | 1.5 kW |
| FORM FACTOR | Water-cooled tower |

✓ 27,648 CUDA cores

✓ 1,728 Tensor Cores

✓ 64 CPU cores

✓ 160 GB GPU memory

✓ 512 GB DDR4 RAM

✓ 1.92 TB NVMe for the OS

✓ 7.68 TB NVMe for data

✓ Up to 28 GPU instances (Multi-Instance GPU, up to seven per A100)
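The figure of 28 instances follows from Multi-Instance GPU (MIG), which lets each A100 be split into up to seven isolated GPU instances. The sketch below encodes the per-GPU maximums for each MIG profile of an A100 40GB as listed in NVIDIA's MIG user guide; it only does the arithmetic, while the partitions themselves are created with the `nvidia-smi mig` subcommands. The helper name `max_instances` is hypothetical.

```python
# Maximum number of instances per GPU for each MIG profile on an A100 40GB
# (profile name format: "<compute slices>g.<memory>gb")
A100_40GB_MIG_MAX_INSTANCES = {
    "1g.5gb": 7,
    "2g.10gb": 3,
    "3g.20gb": 2,
    "4g.20gb": 1,
    "7g.40gb": 1,
}


def max_instances(num_gpus: int, profile: str) -> int:
    """Upper bound on MIG instances when every GPU uses the same profile."""
    return num_gpus * A100_40GB_MIG_MAX_INSTANCES[profile]


for name in A100_40GB_MIG_MAX_INSTANCES:
    print(f"{name:>8}: up to {max_instances(4, name)} instances on a DGX Station A100")
```

With the smallest profile, 1g.5gb, the four GPUs yield 4 × 7 = 28 instances, each with its own share of memory and compute.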

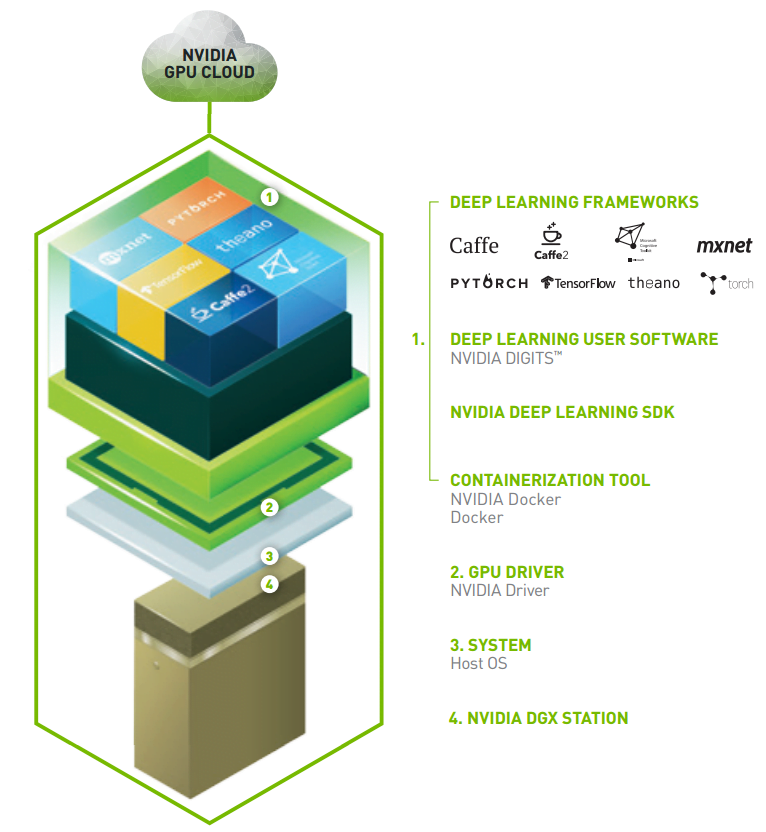

NVIDIA GPU Cloud (NGC)

NVIDIA GPU Cloud (NGC) is a repository of the most widely used frameworks for machine learning and deep learning, HPC applications, and GPU-accelerated visualization. Deploying these applications takes a matter of minutes: copy the link to the appropriate Docker image from the NGC repository, then download (pull) and run the Docker container on the DGX system.
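The pull-and-run workflow above is easy to script. This sketch only builds and prints the `docker` command lines. The image path follows NGC's `nvcr.io/nvidia/<framework>:<tag>` naming, while the tag `21.02-py3` and the host directory are illustrative assumptions. The `--gpus all` flag requires Docker 19.03 or later with the NVIDIA Container Toolkit installed.

```python
from __future__ import annotations

import shlex

NGC_REGISTRY = "nvcr.io/nvidia"


def ngc_commands(framework: str, tag: str, host_dir: str) -> list[list[str]]:
    """Build `docker pull` and `docker run` argument lists for an NGC image.

    `framework` and `tag` are examples (e.g. "pytorch", "21.02-py3");
    `host_dir` should be an absolute path, mounted at /workspace in the container.
    """
    image = f"{NGC_REGISTRY}/{framework}:{tag}"
    pull = ["docker", "pull", image]
    run = [
        "docker", "run",
        "--gpus", "all",   # expose all GPUs to the container
        "--rm", "-it",     # remove the container on exit; interactive terminal
        "-v", f"{host_dir}:/workspace",
        image,
    ]
    return [pull, run]


# Print the commands so they can be reviewed and pasted into a shell
for cmd in ngc_commands("pytorch", "21.02-py3", "/home/user/project"):
    print(shlex.join(cmd))
```

Printing instead of executing keeps the sketch side-effect free; the same argument lists could be passed to `subprocess.run(cmd, check=True)` from an interactive terminal.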

The individual development environments (the versions of all included libraries and frameworks, and the environment settings) are kept up to date and optimized by NVIDIA for deployment on DGX systems. NGC is available at https://ngc.nvidia.com/

Software

What sets DGX systems apart from bare-metal solutions most is the software. All of them offer preinstalled and, above all, performance-tuned environments for machine learning (e.g., Caffe or Caffe2, Theano, TensorFlow, Torch, or MXNet), as well as NVIDIA DIGITS, an intuitive interface for training deep learning models. All of this is neatly packaged in Docker containers, which are continuously updated and can be downloaded from NVIDIA GPU Cloud (NGC).

According to NVIDIA, such a tuned environment delivers up to 30% higher performance for machine learning applications than the same frameworks installed directly on NVIDIA hardware without these optimizations. However, the main advantage of a preinstalled environment is the speed of deployment: the system can be fully operational within hours.

Hardware and software support

Another strength of the NVIDIA solution is support for the whole system. Fast hardware support in case any component fails is a matter of course.

Software support for the entire environment is critical when something does not work as intended, and customers have hundreds of developers ready to help. Support is included with the purchase of every NVIDIA DGX system for a term of 3 to 5 years and can be extended beyond that period.